The DARPA Robotics Challenge – Just Remote-Controlled Toys?

Pretty much every IT and news blog I have seen in the last few days, including the BBC, has had some coverage of the DARPA Robotics Challenge 2013 trials.

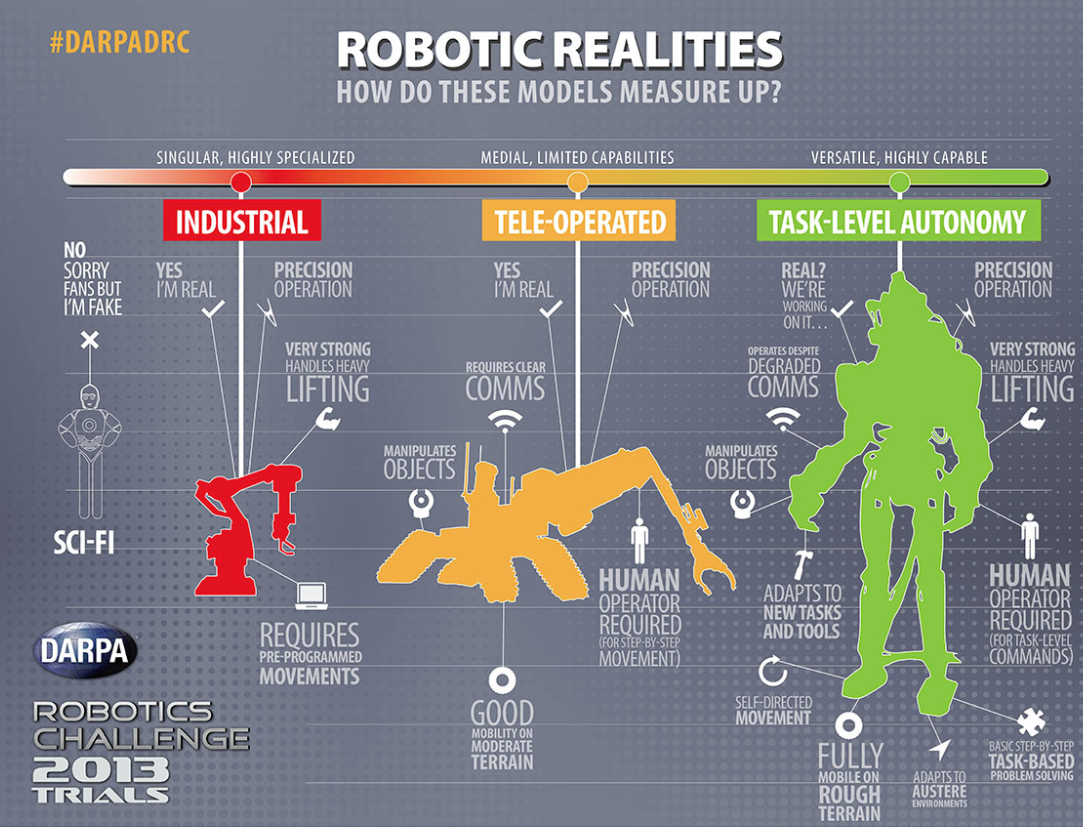

What I am missing in all of the reports is an accurate summary of the state of the art in robotics. So what do we have here? Just fancy, expensive remote-controlled toys, or autonomous robots that operate on basic instructions such as “climb ladder”?

Let’s see:

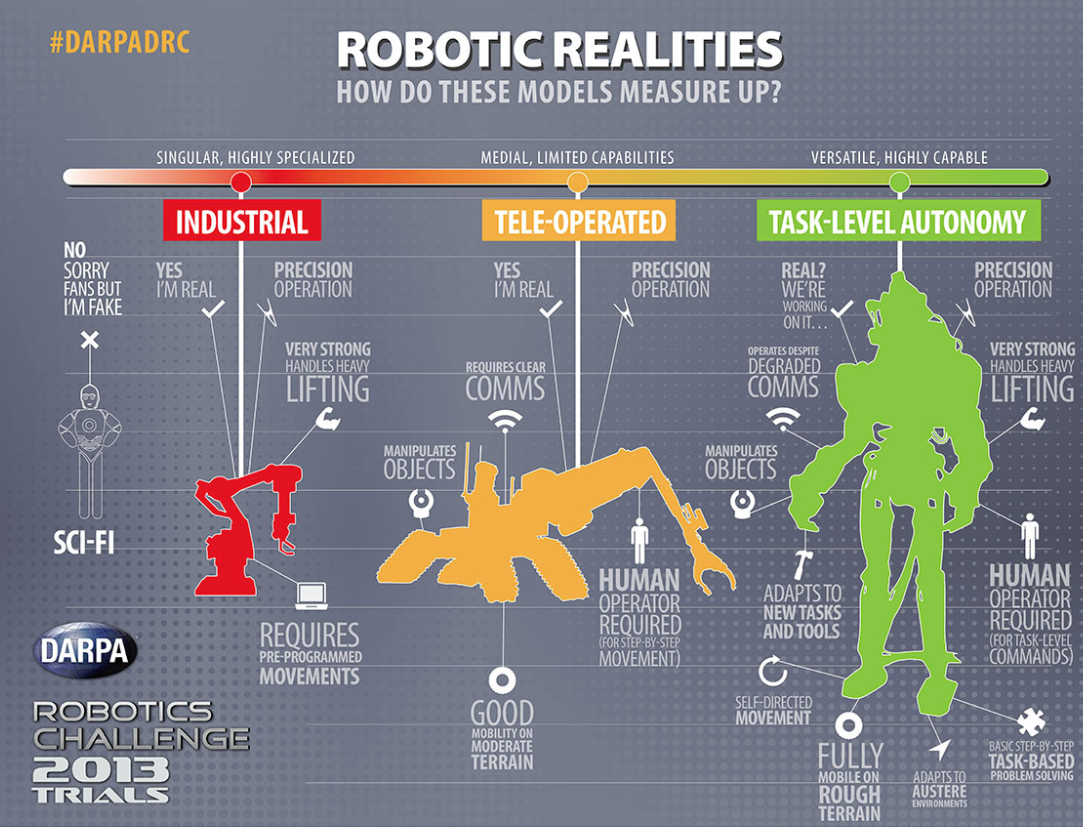

What DARPA wants is clear:

We are looking for “supervised” or “task-level” autonomy. The term means, for example, that a human operator could issue a robot a command like “Open the door” and the robot would be able to complete that task by itself.

Then I read the task descriptions:

Every task description included the rule “No interventions are allowed”.

Wow.

But wait.

On the other hand, the description of the upcoming 2014 Robotics Challenge finals announced that communication with the robots will be made slower. So what now?

First lesson: intervention and communication are not the same thing. I should have guessed that. Intervention is, well, intervention, like giving the robot a hand (haha) to pick up a drill. That was not allowed. Whenever it did happen, it was logged and the team lost the one possible “no intervention” point for that task.

But what about communication?

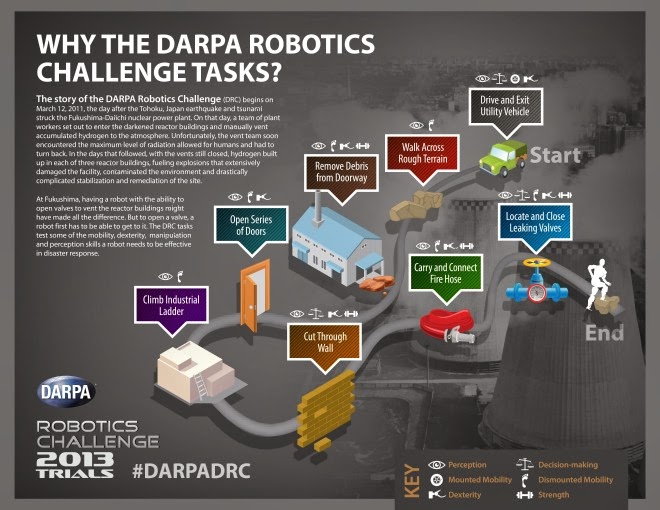

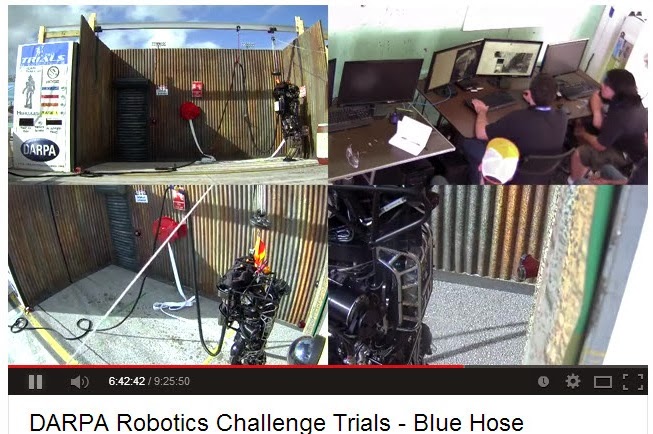

I did a little bit of research on that. It helped that the DARPA YouTube channel contains the complete two-day live stream coverage. Watching the streams makes it clear what communication means:

Panes 1, 3 and 4 in the video show the robot in action, and pane 2 at the top right shows each team’s “control room” for the robot. All teams communicated with their robot, but from the YouTube videos alone it is not possible to judge the extent of it: whether it was task-level autonomy, remote intervention, or outright remote control/teleoperation.

I wish the videos contained more data on what kind of communication took place. For example, a visual indication whenever a command was sent to the robot would have been useful.

Searching further on the web, I found a useful eyewitness comment on the IEEE robotics blog:

nagleonce: That’s about what I expected. The teams have only had the real robots for a few months, and the simulator they used before that was unrealistic about contact friction.

However, much of what I’m seeing is even worse than I expected. Boston Dynamics provided the teams with a dumb controller program (as a .so file) which can do a slow, stable walk and stand. There’s also a function for “stand and give manual control to arms”, while maintaining balance. That was intended just as something to use when debugging. The teams were expected to develop better software. Some of the teams seem to be just using that basic program plus teleoperating the robot with a game controller for each task. I’m watching Team Trooper doing the debris-removal event. Except for walking, they are 100% teleoperating. It’s really slow because DARPA puts lag in the data path to discourage teleoperation.

And over at Slashdot another eyewitness reported:

Spiked_Three: The robots themselves, were lacking. Not the hardware platforms, they seemed ok, but the software sucked. Dabbling around robotics myself, I understand why, and acknowledge the teams efforts. But the fact remains; even for a first attempt, I saw nothing ‘promising’. We (as a planet) spend too much emphasis on blinking light arduinos, and far too little time encouraging software skills. Again, from personal experience, I can see how the teams had to use compilers with no remote debugging, probably archaic monolithic code (‘C’ and Assembler), hard to use cross compiling, and basically the kind of stuff you would only force on a development team if you wanted them to fail.

And while I said I thought the hardware looked OK, I will make an observation; 90% of the time the robots stood there doing nothing, that stupid single LIDAR was spinning its ass off. Was that just to keep its grease warm, or was it indeed a huge bottle neck to have only 1 apparently limited LIDAR? ….Then again, I could be completely wrong.

It seems the YouTube footage, along with the comments, answered my initial question:

We are still seeing mostly remote-controlled toys here.

Unfortunately, at the moment I see no way to find out how much teleoperation each team actually did, except maybe by watching 16 hours of low-res YouTube footage. If someone knows more, please let me know in the comments.

Edit: There were some really good comments about this post on Reddit, for example:

cs 2818: I was at the challenge, and while I’m not on any of the teams I did visit with a few friends who were on Atlas teams and I got to see what their systems were actually capable of, off the course. There has been work on various pieces of autonomy, but you’ve got to consider this:

The teams were competing for funding to continue on the project. The game was to get as many points as possible in the safest way possible (limit risks taken). It’s not that they didn’t have any autonomous systems in place, it’s that they wanted to be sure the system would do the right thing, so they had a human checking the output of the autonomous system, making adjustments and sending it to the robot. (at least the operators I watched)…